#5: Being ambitious with science reform

This week: How to fix peer review, access data, rethink Alzheimer's research, and save over a million lives.

This is my fifth post of Scientific Discovery, a weekly newsletter where I’ll share great new scientific research that you may have missed.

#1: Peer review is essential to science, and that’s why it needs reform

Article: Real peer review has never been tried

We launched a new issue of Works in Progress yesterday, and it includes a new article by me on how to fix peer review.[1]

It’s a long article, but as the message is important, here’s a summary:

Before peer review, scientists published in journals because they were rapid platforms to circulate research – with publication taking around a week at Nature in the 1950s, for example. These days, that process takes nine months on average (and close to three years in economics).

Peer review was only really adopted by journals in the 1970s and ’80s, around and after the time they were bought by big commercial publishing companies. This happened because journals received far more submissions than they could print – peer review helped with curation and protected their reputations.

Today, we recognise that peer review is a crucial part of how we do science; it’s how we recognise flaws, how we understand research from outside our expertise, how we improve its quality.

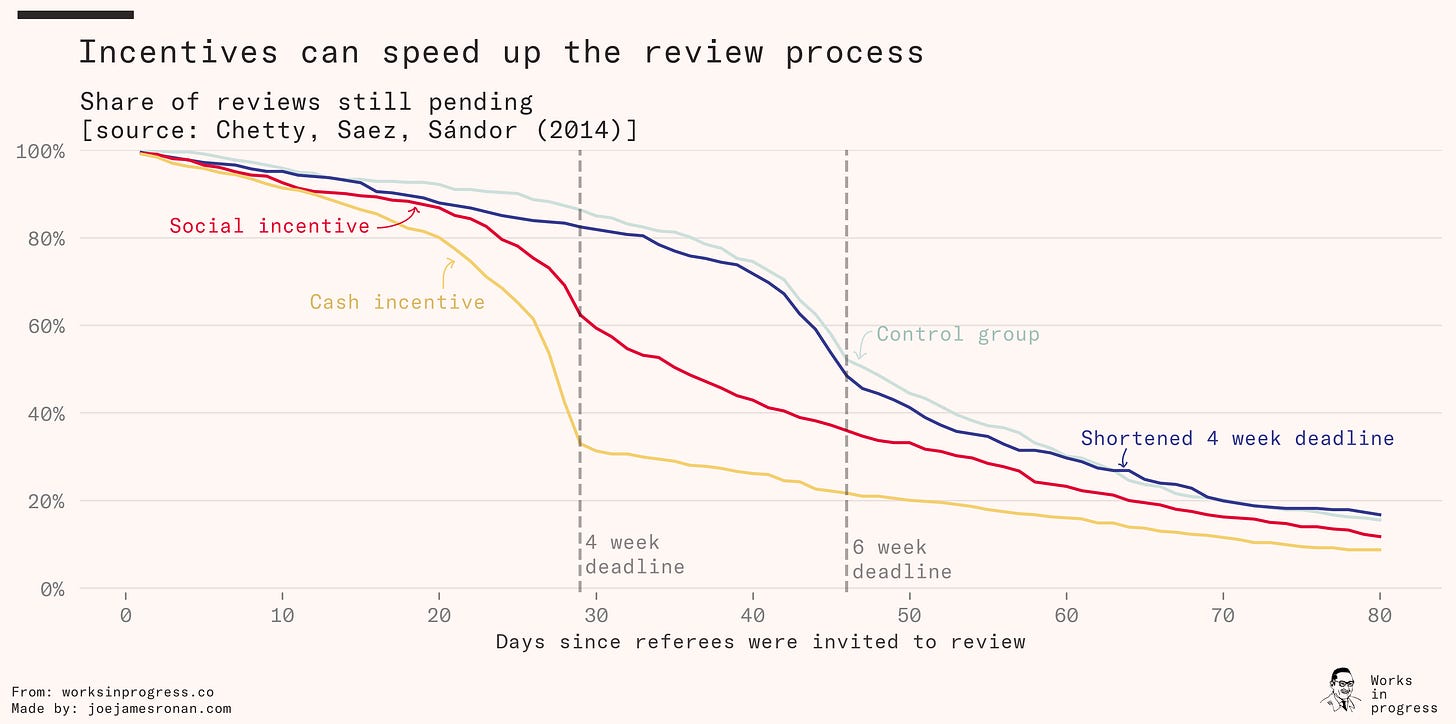

There are many changes that journals can make to speed up the process without compromising on quality. Experiments show that cash rewards for reviewers work. But we could also build centralized platforms to track the time and interests of reviewers – and for researchers to submit their work, be matched with reviewers across journals, and have studies published smoothly.

Some people will think these ideas already represent big shifts to the current model of scientific publishing. I don’t think they’re ambitious enough.

Without being asked to, some scientists have developed tools and platforms to fill in the gaps left by journals: software to check the statistics of studies and images for manipulation, websites to share data and code, forums to comment on fabrication and plagiarism in research. These, too, are part of ‘peer review’. But they are tasks rarely performed by journals.

Reviews are, in my view, not something that should happen behind closed doors or be left to journals. Not when so much research and peer review already happens outside of them – on preprints, forums, social media and blogs.

I think the future is about academia and industry treating peer review as a research output – investing in teams and institutions that can develop new tools and platforms to take it further, and adapt it to the way science is produced by different fields and in the future.

That’s all in my article and, if you enjoy it, I hope you share it! (And to go along with it, you may also like this article I co-wrote last year on how to speed up science through the division of labour.)

#2: Data is rarely ‘available on request’

Say you were reading research and wanted to check the data, compare it to your own, or build upon it. Journals today will do a little to help you out. You’ll often see a line saying that ‘data is available upon reasonable request’ along with a contact email for one of the authors.

If you took this route – and contacted the authors – how often would you actually get the data?

Hardly ever. That’s the answer according to this new study, where the researchers contacted authors of a few thousand papers across hundreds of journals. These were people who actually stated their data would be available on request. But most didn’t respond and some actually declined to share their data, meaning that in the end only 6.8% provided it.

Often the assumption is that researchers don’t share data because they’re unwilling to be double-checked or criticised. But there are many more reasons this might happen: researchers might have switched universities and their contact emails; they may have lost access to the data; they may not have stored it in an easily shareable format; or they may have simply been too busy with other work and inundated with emails.

These aren’t excuses, but they’re reasons why the expectation – that readers will be able to access data if they contact researchers by email months or years after the study is published – is baffling.

Why not make researchers publish their data along with their papers at the outset, on any of the data platforms that exist today? And if there are privacy concerns, take steps and build tools to anonymize that data or store summary data that isn’t identifiable.[2]
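To make the idea of non-identifiable summary data a bit more concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: the records, the field names (`age_band`, `score`) and the minimum group size of 5 are all made up for the example, and suppressing small groups is just one common disclosure-control step, not a complete anonymization method.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical threshold: groups smaller than this are not published,
# because very small groups can make individuals easier to identify.
MIN_GROUP_SIZE = 5


def summarise(records, group_key, value_key, min_group_size=MIN_GROUP_SIZE):
    """Collapse individual-level rows into group-level counts and means."""
    groups = defaultdict(list)
    for row in records:
        groups[row[group_key]].append(row[value_key])

    summary = {}
    for group, values in groups.items():
        if len(values) < min_group_size:
            continue  # suppress small groups instead of publishing them
        summary[group] = {"n": len(values), "mean": round(mean(values), 2)}
    return summary


# Toy, invented records: six participants in one age band, two in another.
records = (
    [{"participant_id": i, "age_band": "60-69", "score": 20 + i % 3} for i in range(6)]
    + [{"participant_id": 100 + i, "age_band": "70-79", "score": 25} for i in range(2)]
)

summary = summarise(records, "age_band", "score")
print(summary)
```

The shared output contains no participant IDs and leaves out the two-person group entirely. Real datasets would usually need more than this, which is part of why data management deserves specialists.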

Data management is a crucial part of scientific research today that doesn’t get the attention it deserves, and in an ideal world, scientists would work in teams with specialists who had the skills to make this happen smoothly and maintain it in the long term.

#3: How to save over a million lives

Podcast: Sir Martin Landray on saving over a million lives (BBC Radio 4, 2022)

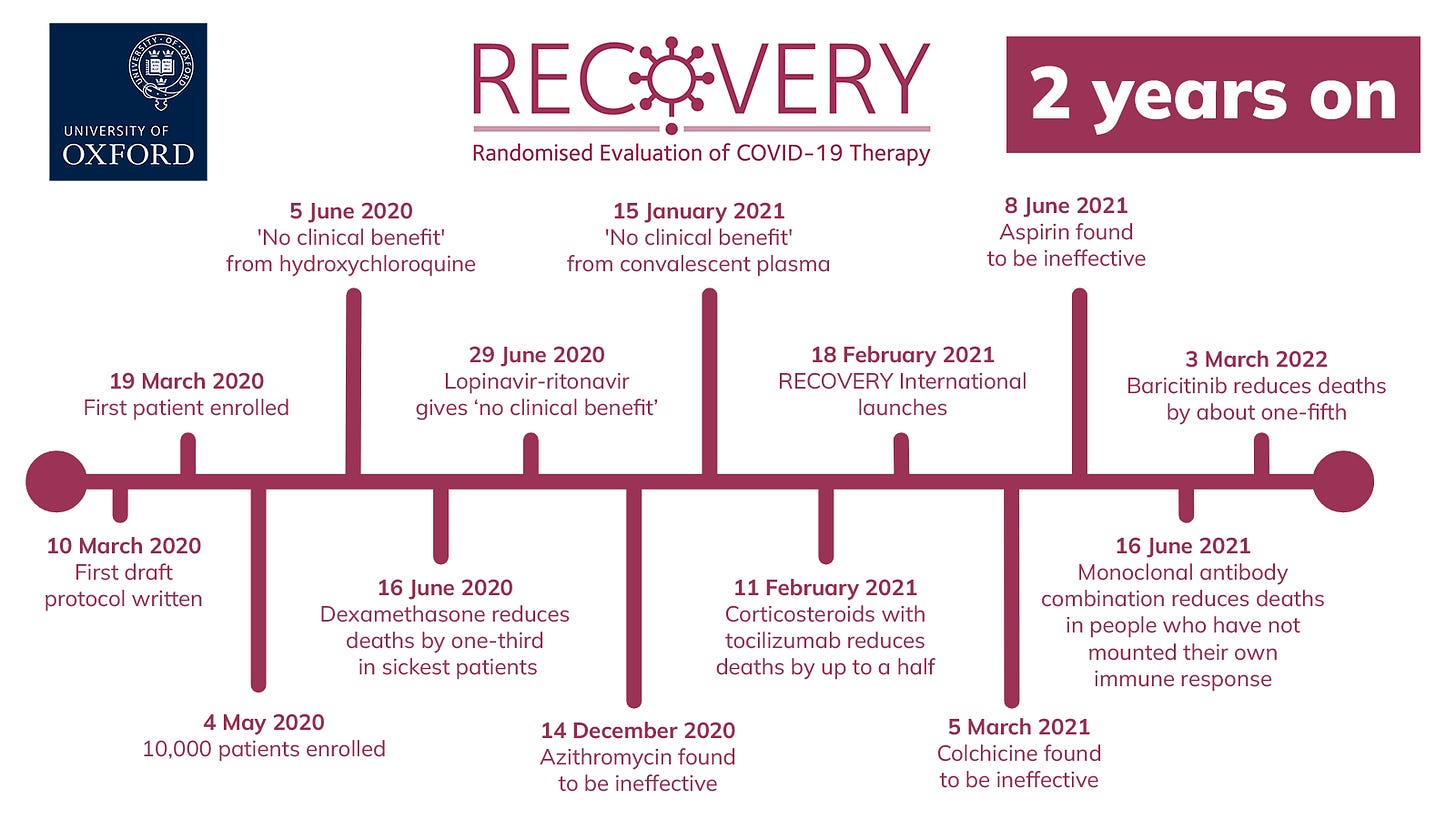

I recently listened to this interview with Sir Martin Landray, who led the large RECOVERY trial in the UK, which tested the benefits of potential Covid treatments. It was actually one giant randomized controlled trial that recruited patients from the UK and allocated them to receive different drugs, essentially running several trials together.
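For readers who like to see the mechanics, here is a rough Python sketch of what "running several trials together" can look like: one pool of patients, randomly allocated across several arms that share a single comparison group. This is a simplified illustration, not RECOVERY's actual allocation procedure. The arm names, the equal allocation probabilities and the `allocate` function are all assumptions made for the example.

```python
import random
from collections import Counter

# Hypothetical arms: one shared control group plus several treatments.
ARMS = ["usual care", "treatment A", "treatment B", "treatment C"]


def allocate(patient_ids, arms, seed=0):
    """Assign each patient to one arm at random, with equal probability."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    return {pid: rng.choice(arms) for pid in patient_ids}


allocation = allocate(range(1000), ARMS, seed=42)
counts = Counter(allocation.values())

# Each treatment arm can later be compared against the same "usual care" group.
for arm in ARMS:
    print(arm, counts[arm])
```

The appeal of the design is that every treatment arm is compared with the same control group, so adding another drug to the trial does not require recruiting a whole new set of control patients.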

It was the first study to find that the drug dexamethasone worked, which is estimated to have saved over a million lives by March last year. But the trial also found several other effective drugs like tocilizumab, and it showed drugs that were ineffective, like hydroxychloroquine and azithromycin.

As I’ve written about before, RCTs have been a major advance in our ability to do good scientific research when their principles are followed through.

The key ingredients to designing a clinical trial in practice, Landray explains, are to make it simple and streamlined – making it easy for people to enrol and for scientists to measure clear outcomes that are already routinely collected.

#4: Major scandals in the amyloid hypothesis of Alzheimer’s disease

Article: Blots on a field? (Charles Piller, 2022)

There’s a big new story in Science today, on fabrication in landmark papers on the amyloid hypothesis of Alzheimer’s disease, published in the 2000s and 2010s.

The amyloid hypothesis – that Alzheimer’s disease is caused by deposits of the amyloid beta protein in the brain – has been around for much longer. But these papers claimed to find the molecule that was directly responsible for cognitive decline in rat models of the disease: an ‘oligomer’ called amyloid-beta star 56 (Aβ*56).

They were considered groundbreaking findings. But even though few other groups were able to find the same purified oligomer in brain tissue, and even though no drugs or treatments that targeted the amyloid protein have worked in clinical trials, the results of these papers continued to boost the amyloid hypothesis.

This continued until they were called into question by other scientists and users of the website PubPeer – where people can post public comments and concerns about research papers. Here, the platform was used to point out that many images in these papers appeared to have been tampered with and manipulated to show the right kinds of results.

The whole story is fascinating, and to me underscores the vast potential of what peer review could be versus what it is today. We may not think of image sleuthing, reproducing the results, or investigating conflicts of interest as the scope of peer review, but why not?

The quality of research – the ‘stamp of approval’ that peer review is supposed to give – depends not just on whether someone’s written up a paper nicely, but on its entire integrity.

Alzheimer’s is one of the leading causes of death today, estimated to cause 5% of deaths in people over 70 years old in the US; and the NIH spends about half of its overall funding for Alzheimer’s disease on amyloid research.

Science reform might sound like a niche topic, or something some scientists want to avoid drawing attention to in order to maintain trust in science. But this shows that it’s imperative that we do draw attention to it, and meet the standards that people expect from us.

Moving resources away from ideas that haven’t worked, pinning down theories that actually lead somewhere or medicines that actually benefit people, has saved millions of lives and has the potential to save countless more.

More links

Of course I have to recommend everything else in the latest issue of Works in Progress:

The maintenance race by Stewart Brand – on the thrilling story of the first around-the-world yacht race, a deadly contest, and what it tells us about the importance of maintenance to civilisation.

We don’t have a hundred biases, we have the wrong model by Jason Collins – an excellent summary of behavioural economics research today and what it will take to move it forward.

The street network by my very talented colleagues at Stripe Press – a documentary about a DIY internet created by Cuban gamers that then served the entire island.

The decline and fall of the British economy by Davis Kedrosky – an insightful piece on how America overtook Britain a century ago.

Reclaiming the roads by Carlton Reid – on the fascinating history of roads and the effects of them being dominated by cars today.

Age of the bacteriophage by Léa Zinsli – on the great potential of using phage therapy to fight back against antibiotic resistance.

Derek Lowe on spectroscopy, colour and how the Webb telescope images were created.

Mark Koyama and Jared Rubin’s great podcast interview on how the world became rich.

Kelsey Piper on the deworming debate. I have stronger opinions on this, and maybe I’ll write about the topic soon.

But that’s all for this week!

As ever, please let me know if you spot any mistakes or think I’ve missed anything important,[3] and I hope you’ll subscribe if you haven’t already.

See you next time :)

– Saloni

[1] I came up with this title in the shower and it made me laugh a lot.

[2] These practices have been commonly used in fields like genomics for decades, as a result of the ‘Bermuda principles’ that scientists developed during the Human Genome Project.

I feel like the RECOVERY trial is wildly underrated. The RNA vaccines got the Breakthrough prize (very deservedly), and yet although the RECOVERY trial saved maybe upwards of a million people, they don't seem to have won anything. Given the problems with basic science generally - e.g. everything you discuss in the peer review section, the amyloid Alzheimer's probable-fraud - maybe it's a bad idea for the scientific world to just ignore this extremely well-run RCT in favour of the fancy new thing*. Why isn't everyone screaming about how basic research - performed very well - can have almost as big an impact?

*the fancy new thing is excellent and probably deserves the Nobel too.

Great piece. The peer review inefficiencies hit close to home. The best approach I have seen so far has been with https://openreview.net/. I had a nice experience with it, but it was a bit unnerving at first (this was in machine learning, where the community is more open to disrupting established models). Long term, I think this system is far more trustworthy if we combine it with arXiv, instead of the paywall leeches that suck the life out of academia today. Anytime I think about peer review, I feel it can't be fixed unless we address the issue of publicly funded research benefiting private gatekeepers.